Lesson-5

Hi, this is blackhat again, hope you're liking these articles. In the previous lesson we learned how to compute loss. In this lesson we're going to cover how to make the loss as small as possible.

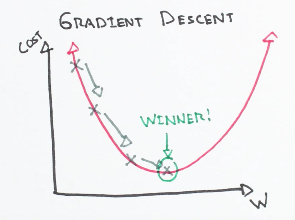

Simple explanation! understand the Gradient Descent algorithm and Loss

Well, wouldn't it be nice if we had some sort of direction guide that led us to a lower loss than we obtained before? This is where gradient descent comes into play...

➧ gradient descent?

"Gradient descent is a first-order iterative optimization algorithm for finding the minimum of a function."

Didn't get a word? Don't panic, I'll explain it...

Suppose you have set yourself the goal of becoming a machine learning expert. If every day you put in one hour or more of learning and applying things, then great, you are getting one step closer to the goal each day. Gradient descent works the same way: it moves toward the minimum loss one small step at a time.

Got the full idea? Excellent!

➧ now we have two types of gradient descent

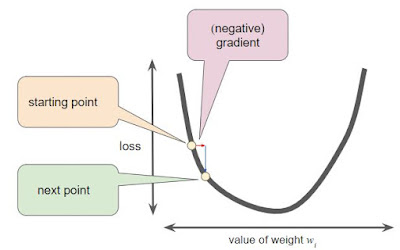

- first when we are progressing then we can say that the distance to the goal is decreasing so it is negative gradient descent which is good right.

- second when we are not contribution even a single minute to learning then we can say that the distance from us to the goal is increasing(because the competition in the market is increasing day by day due to that you may lose interest) so it is positive gradient decent.
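In math terms, the sign of the gradient at a point tells us which way to move to lower the loss. Here is a minimal sketch, assuming a made-up bowl-shaped loss `(w - 3)^2` (the function, its minimum at 3, and the sample points are all assumptions for illustration, not something from this lesson's images):

```python
def loss(w):
    """Hypothetical bowl-shaped loss with its minimum at w = 3."""
    return (w - 3.0) ** 2


def gradient(w):
    """Derivative of the loss above: d/dw (w - 3)^2 = 2 * (w - 3)."""
    return 2.0 * (w - 3.0)


# Check the gradient sign to the left of, at, and to the right of the minimum.
for w in (0.5, 3.0, 5.0):
    g = gradient(w)
    if g < 0:
        advice = "loss falls as w grows, so increase w"
    elif g > 0:
        advice = "loss rises as w grows, so decrease w"
    else:
        advice = "gradient is zero, we are at the minimum"
    print(f"w = {w}: loss = {loss(w):.2f}, gradient = {g:+.1f} -> {advice}")
```

In every case the loss goes down if we move against the sign of the gradient, which is why the steps are taken along the negative gradient.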

All doubts clear? Nice. Now you may ask:

Q. How can we carry out gradient descent?

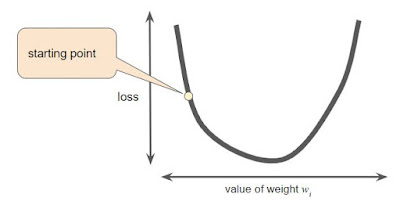

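ans. Don't worry, I'll explain it.

step 1 => consider a bowl-like shape...

step 2 => choose any starting point for w1. Mostly people choose 0 or a random value.

here we have chosen a starting point slightly greater than zero.

step 3 => compute the gradient at that point and take a step in the direction of the negative gradient, since that is the direction in which the loss goes down.

step 4 => now we can recompute the gradient at the new point and repeat step 3 until we reach our goal.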

we just described an algorithm called gradient descent. we start somewhere and we continuously take steps that hopefully get us closer and closer to some minimum.
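The loop described above can be sketched in a few lines. This is only an illustration under stated assumptions: the bowl is the hypothetical loss `(w - 3)^2`, and the names `gradient_descent`, `step_size`, and `num_steps`, along with their values, are made up for this example.

```python
def gradient(w):
    """Gradient of the hypothetical bowl-shaped loss (w - 3)^2."""
    return 2.0 * (w - 3.0)


def gradient_descent(w_start, step_size, num_steps):
    """Repeat: compute the gradient, then step in the negative gradient direction."""
    w = w_start
    for _ in range(num_steps):
        w = w - step_size * gradient(w)  # move against the gradient
    return w


# Start slightly greater than zero, as in the walkthrough above.
w_final = gradient_descent(w_start=0.5, step_size=0.1, num_steps=100)
print(f"w after 100 steps: {w_final:.6f}")
```

Here the loop simply runs for a fixed number of steps. A real training loop would usually also stop early once the loss stops improving.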

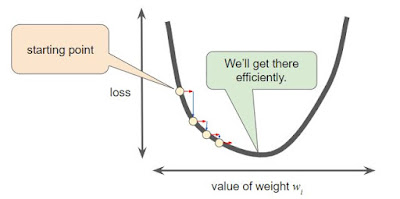

Q. How large of a step should we take in the direction of the negative gradient?

ans. Well, that is decided by the learning rate.

Learning rate => "the learning rate is a small positive number that is multiplied by the gradient to decide how far the next point is from the current point"

next point = current point - (learning rate * gradient)
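To see the arithmetic of a single step, here is a small worked example, assuming the standard update rule (next point = current point minus learning rate times gradient). The loss `(w - 3)^2`, the starting point, and the learning rate of 0.1 are assumptions chosen only to keep the numbers simple.

```python
learning_rate = 0.1   # assumed value, chosen for illustration
w_current = 0.5       # starting point slightly greater than zero

# Gradient of the hypothetical loss (w - 3)^2 at the current point.
grad = 2.0 * (w_current - 3.0)   # 2 * (-2.5) = -5.0

# The step is the learning rate multiplied by the gradient.
step = learning_rate * grad      # 0.1 * (-5.0) = -0.5

# Subtracting the step moves us in the direction of the negative gradient.
w_next = w_current - step        # 0.5 - (-0.5) = 1.0

print(f"gradient = {grad}, step = {step}, next point = {w_next}")
```

The gradient is negative here, so subtracting the step moves w to the right, toward the bottom of the bowl at 3.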

➧ how to choose the learning rate:

1. If we choose a really small learning rate, we'll take a bunch of teeny tiny gradient steps, requiring a lot of computation to reach the minimum, or goal.

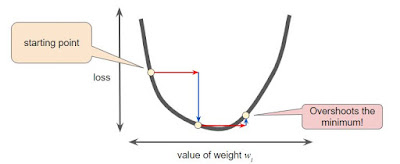

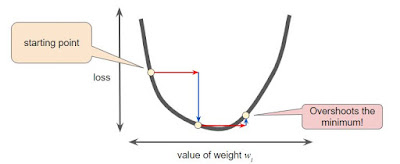

2. However, if we choose a learning rate that is very large, we'll take a large step in the direction of the negative gradient. It may overshoot the minimum (the goal) and leave it behind, which makes reaching the goal even more difficult and can cause your model to diverge.

3. If we choose a learning rate that is neither too big nor too small, it is called the ideal learning rate.

now, this looks just right.
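The three cases above can be tried out side by side. This is a rough sketch, assuming the hypothetical loss `(w - 3)^2` and three hand-picked learning rates (0.001, 1.1, and 0.3). Which values count as too small or too large depends on the loss, so these numbers only apply to this toy example.

```python
def gradient(w):
    """Gradient of the hypothetical bowl-shaped loss (w - 3)^2."""
    return 2.0 * (w - 3.0)


def run(learning_rate, num_steps=20, w_start=0.5):
    """Take num_steps gradient steps and return the final w."""
    w = w_start
    for _ in range(num_steps):
        w = w - learning_rate * gradient(w)
    return w


# Compare a tiny, a huge, and a moderate learning rate on the same bowl.
for name, lr in [("too small", 0.001), ("too large", 1.1), ("about right", 0.3)]:
    w = run(lr)
    print(f"{name:12s} lr = {lr}: distance to minimum after 20 steps = {abs(w - 3.0):.6f}")
```

With the tiny rate we have barely moved after 20 steps, with the huge rate every step overshoots and we end up farther away than where we started, and with the moderate rate we land almost exactly on the minimum.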

Thanks!!! for Visiting Asaanhai or lucky5522 😀😀
